Variational-Based Spatial–Temporal Approximation of Images in Remote Sensing

Abstract

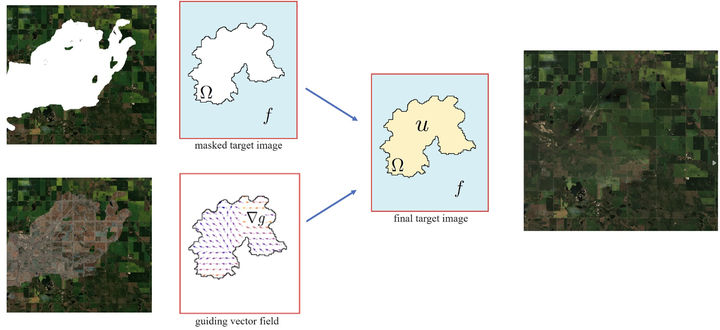

Cloud cover and shadows often hinder the accurate analysis of satellite images, affecting applications such as digital farming, land monitoring, environmental assessment, and urban planning. This paper presents a new approach to enhancing cloud-contaminated satellite images, based on a novel variational model that approximates the combined temporal and spatial components of satellite imagery. From this model, we derive two spatial–temporal methods, each with an algorithm that computes the missing or contaminated data in cloudy images using seamless Poisson blending. In the first method, we extend Poisson blending to compute the spatial–temporal approximation, using the pixel-wise temporal approximation as the guiding vector field. In the second method, which covers a more general case, we use the rate of change in the temporal domain to divide the missing region into low-variation and high-variation sub-regions; accounting for the temporal variation in these sub-regions better guides Poisson blending and further refines the spatial–temporal approximation. The proposed methods have the same complexity as conventional methods, which is linear in the number of pixels in the region of interest. Our comprehensive evaluation demonstrates the effectiveness of the proposed methods using quantitative metrics, including the Root Mean Square Error (RMSE), Peak Signal-to-Noise Ratio (PSNR), and Structural Similarity Index Metric (SSIM), and shows significant improvements over existing approaches. The evaluations also offer insights into how to choose between the first and second methods for a specific scenario, taking into account the temporal and spatial resolutions as well as the scale and extent of the missing data.
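
To make the idea of a temporal approximation guiding Poisson blending concrete, the following is a minimal sketch and not the algorithm proposed in this paper. It assumes a single-band image stored as nested lists, a Boolean mask marking the missing pixels, and a temporal estimate `guide` that is already available; the function name `poisson_fill`, its parameters, and the choice of plain Gauss-Seidel iteration are hypothetical and were picked for brevity. The sketch solves the standard discrete Poisson equation so that the gradients inside the masked region follow those of `guide`, while the known pixels around it act as boundary values.

```python
def poisson_fill(target, guide, mask, iters=500):
    """Fill masked pixels of `target` so their gradients follow `guide`.

    Hypothetical helper for illustration only: it solves the discrete
    Poisson equation with Gauss-Seidel sweeps, which is simple but is not
    claimed to match the solver or complexity of the proposed methods.
    """
    h, w = len(target), len(target[0])
    # Known pixels are copied unchanged; masked pixels start from the guide.
    out = [[guide[i][j] if mask[i][j] else target[i][j] for j in range(w)]
           for i in range(h)]
    missing = [(i, j) for i in range(h) for j in range(w) if mask[i][j]]
    for _ in range(iters):
        for i, j in missing:
            total, count = 0.0, 0
            for di, dj in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                ni, nj = i + di, j + dj
                if 0 <= ni < h and 0 <= nj < w:
                    # Neighbour value plus the guide's difference p - q.
                    total += out[ni][nj] + (guide[i][j] - guide[ni][nj])
                    count += 1
            if count:
                out[i][j] = total / count
    return out


# Synthetic example: a linear ramp with a 3x3 "cloud" in the middle.
truth = [[i + 2.0 * j for j in range(7)] for i in range(7)]
# Assumed temporal estimate: same gradients as the truth, biased by +5.
guide = [[v + 5.0 for v in row] for row in truth]
mask = [[2 <= i <= 4 and 2 <= j <= 4 for j in range(7)] for i in range(7)]
cloudy = [[0.0 if mask[i][j] else truth[i][j] for j in range(7)]
          for i in range(7)]
filled = poisson_fill(cloudy, guide, mask)
max_err = max(abs(filled[i][j] - truth[i][j])
              for i in range(7) for j in range(7))
```

In this synthetic case the guide is offset from the true image by a constant, so copying it directly would leave a visible seam. Because only its gradients are used, the filled region matches the surrounding pixels. Real imagery would call for a sparse direct or multigrid solver rather than fixed Gauss-Seidel sweeps.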